Introduction

Update: The initial version of this post was based on a tinygrad version released in February 2021. It was updated to a recent version on 2022-06-26.

At first glance, tinygrad seems to be just another deep learning framework. Well, not exactly. It is a few orders of magnitude smaller and simpler than e.g. PyTorch. Anyone who has ever tried to read the low-level source code of PyTorch or TensorFlow will appreciate that. It certainly promises a low barrier to adding more accelerators.

Due to its extreme simplicity, it aims to be the easiest framework to add new accelerators to, with support for both inference and training.

Where does the name come from?

tinygrad will always be below 1000 lines. If it isn’t, we will revert commits until tinygrad becomes smaller.

I think that this is a bit too ambitious unless the project is split into several packages, e.g. to add support for various accelerators, and model structures are outsourced to config files and loaded from there. But yes, it certainly sounds interesting.

Update: it seems I was not too wrong here:

The sub 1000 line core of it is in tinygrad/

The acceleration stuff moved to accel.

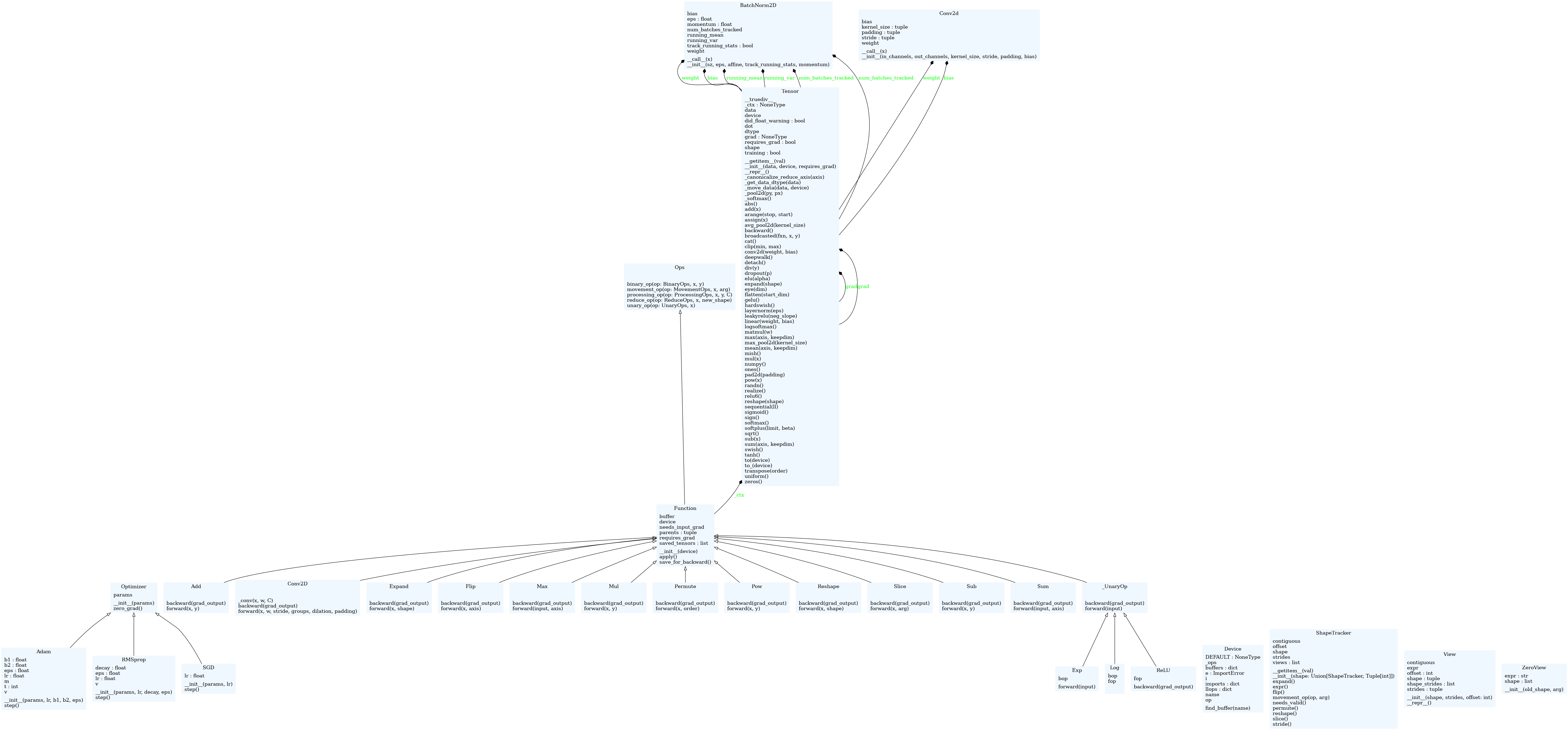

Structure

pyreverse --filter-mode ALL --colorized --max-color-depth 7 --output webp ./tinygrad/

outputs the following code structure:

Accelerator usage

GPU

NVIDIA’s CUDA certainly dominates the realm of training neural networks. Therefore, it is a nice change to see that the accelerator support uses OpenCL. Is this really GPU-specific? No, some CPUs support certain subsets of OpenCL as well (e.g. via pocl). The interesting aspect of using OpenCL is that completely different hardware, e.g. FPGAs, can be used to accelerate training or inference. If I remember correctly, some of Intel’s FPGAs can be programmed using OpenCL. However, I’m not sure how easily JIT-compiled PyOpenCL kernels can be converted into input for programming FPGAs that don’t provide any OpenCL capabilities.

It also looks like CUDA support via PyCUDA has been added but is not integrated yet.

ANE (Apple Neural Engine)

The tensor class seems to support some ANE operations. It seems like they are building their own ANE abstraction layer to utilize the ANE for training purposes as well.

This support now seems to be removed or broken.

First Steps

NB! If we are using tinygrad within a conda environment, we have to install an OpenCL implementation (e.g. pocl):

conda install -c conda-forge pocl pyopencl clinfo

clinfo is useful for listing the available OpenCL devices, but it needs to be installed within the conda environment.

Running the standard example shows that there are two buffer types, CPUBuffer and GPUBuffer:
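The same device list can also be queried from Python. This is a minimal sketch, assuming PyOpenCL is installed as shown above. The helper name `list_opencl_devices` is made up for illustration, and it simply returns an empty list if PyOpenCL or an OpenCL driver is missing:

```python
def list_opencl_devices():
    """Return (platform name, device name, device type) tuples for all OpenCL devices."""
    try:
        import pyopencl as cl
    except ImportError:
        return []  # PyOpenCL is not installed in this environment

    devices = []
    try:
        for platform in cl.get_platforms():
            for device in platform.get_devices():
                # device.type is a bit field; to_string() turns it into e.g. "GPU" or "CPU"
                kind = cl.device_type.to_string(device.type)
                devices.append((platform.name, device.name, kind))
    except cl.Error:
        # No OpenCL platform or device could be queried; keep what was collected so far
        pass
    return devices


for entry in list_opencl_devices():
    print(entry)
```

With pocl installed, a CPU device should show up here next to any GPU that has a working OpenCL driver.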

from tinygrad.tensor import Tensor

(Tensor.ones(5000,5000).gpu() + Tensor.ones(5000,5000).gpu()).cpu()

outputs

<Tensor CPUBuffer([[2., 2., 2., ..., 2., 2., 2.],

[2., 2., 2., ..., 2., 2., 2.],

[2., 2., 2., ..., 2., 2., 2.],

...,

[2., 2., 2., ..., 2., 2., 2.],

[2., 2., 2., ..., 2., 2., 2.],

[2., 2., 2., ..., 2., 2., 2.]], dtype=float32) with grad None>

whereas

from tinygrad.tensor import Tensor

(Tensor.ones(5000,5000).gpu() + Tensor.ones(5000,5000).gpu())

outputs

<Tensor <GPUBuffer with shape (5000, 5000)> with grad None>
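The two outputs differ because the result stays in a GPUBuffer until .cpu() copies it back to the host. The following minimal sketch puts both cases side by side, using only the calls from the examples above (this is the API of the tinygrad version described here; newer releases may differ). The helper name `compare_reprs` and the smaller tensor size are assumptions for illustration, and the helper returns None if tinygrad or an OpenCL device is not available:

```python
def compare_reprs(n=4):
    """Return (repr of the GPU result, repr after copying back) or None if unavailable."""
    try:
        from tinygrad.tensor import Tensor

        on_gpu = Tensor.ones(n, n).gpu() + Tensor.ones(n, n).gpu()
        on_cpu = on_gpu.cpu()  # copy the result back to host memory
        return repr(on_gpu), repr(on_cpu)
    except Exception:
        # tinygrad is missing, no OpenCL device was found,
        # or the installed version no longer offers .gpu()/.cpu()
        return None


result = compare_reprs()
if result is not None:
    gpu_repr, cpu_repr = result
    print(gpu_repr)  # buffer on the device, values not shown
    print(cpu_repr)  # buffer on the host, values shown
```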

PyOpenCL displays compiler warnings after export PYOPENCL_COMPILER_OUTPUT=1:

CompilerWarning: Built kernel retrieved from cache. Original from-source build had warnings:

Build on <pyopencl.Device 'Intel(R) Iris(R) Xe Graphics [0x9a49]' on 'Intel(R) OpenCL HD Graphics' at 0x562deb03ccb0> succeeded, but said:

1:1:112: warning: double precision constant requires cl_khr_fp64, casting to single precision

inline float get_A(__global const float *x, int gid) { int valid = 1; int idx = gid; ; return valid ? x[idx] : 0.0;}

^

1:2:112: warning: double precision constant requires cl_khr_fp64, casting to single precision

inline float get_B(__global const float *x, int gid) { int valid = 1; int idx = gid; ; return valid ? x[idx] : 0.0;}inline float _ewop(int gid,float acc,__global const float *A_g,__global const float *B_g) {float A = get_A(A_g, gid);

^

1:4:19: warning: double precision constant requires cl_khr_fp64, casting to single precision

float acc = 0.0;

^

warn(text, CompilerWarning)
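The PYOPENCL_COMPILER_OUTPUT variable mentioned above can also be set from Python instead of the shell. This is a minimal sketch; setting the variable before importing tinygrad or PyOpenCL is an assumption made here to be on the safe side:

```python
import os

# Ask PyOpenCL to show OpenCL compiler output (warnings such as the ones above).
# Set this before tinygrad/PyOpenCL build any kernels.
os.environ["PYOPENCL_COMPILER_OUTPUT"] = "1"

# from tinygrad.tensor import Tensor  # import afterwards, as in the examples above
```

The warnings themselves look harmless: 0.0 is a double-precision literal in OpenCL C, and since the device does not offer cl_khr_fp64, the compiler casts it to single precision.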

A quick DNN inference example is this one:

ipython3 examples/efficientnet.py https://media.istockphoto.com/photos/hen-picture-id831791190

It seems that export GPU=1 finally works and yields a noticeable speedup.

Results on an Intel i7-1165G7 without GPU acceleration:

8 8.045593 hen

did inference in 1.10 s

with GPU acceleration:

8 8.0455885 hen

did inference in 0.31 s
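The speedup follows directly from the two timings above. Both are single runs, so the result is only a rough estimate:

```python
cpu_seconds = 1.10  # inference time without GPU acceleration (from above)
gpu_seconds = 0.31  # inference time with GPU=1 (from above)

speedup = cpu_seconds / gpu_seconds
print(f"speedup: {speedup:.2f}x")  # prints "speedup: 3.55x"
```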

Conclusions

tinygrad is certainly anything but mature. However, I guess it is best to leave it to each reader to decide whether tinygrad is a fucking joke or something desperately needed in a world of overly complex deep learning frameworks.